Surprisingly, I managed to image the PANSTARRS comet this evening even though I was looking in the wrong place!…

Author Archives: steve

OpenIndiana: How could the developers go so wrong?

Well, today I’ve been playing with OpenIndiana, the OpenSolaris derivative created after Oracle killed off its ancestor.

To say that I was disappointed would be an understatement. It’s rather obvious that the developers of the distribution are not system administrators of integrated networked environments; otherwise they would not have made such stupid design decisions.

Anyway, here’s the story of my day:

Initially I downloaded the live DVD desktop version, as I assumed that, when installed, it would effectively replicate a Solaris desktop environment. Seeing as Solaris in this configuration is also capable of being a fully functional server, I assumed the same would be true of OpenIndiana.

So, I created a virtual machine under VirtualBox on the Mac, booted the DVD image and started the install. I was surprised at how little interaction there was during the install process: all it asked about was how to partition the disk, a root password and a new user. After the install, things went downhill.

Now, it seems that the OpenIndiana bods are trying to ape Linux. When you boot up you get a GDM login screen, but you can’t log in as root. So, you log in as the user you created. Not too much of a problem, except that you now can’t start any of the configuration applications: they fail silently after you type the root password. You can’t sudo commands either, as it says that you don’t have permission…

Finally, I managed to get past this roadblock by trying ‘su -’, which then asked me to change the root password! Once this was done I could actually run the configuration utilities. Not that it got me very much further, as there seems to be no way to set a static IP address out of the box.

I decided to trash that version and download the server version DVD instead. Maybe that would be better? Surely it would; it’s designed to be a server…

I booted the DVD image and the text installer started. To begin with it was very similar to the old Solaris installer, except that once again all it asked about was disk partitioning, root password/user creation and networking, and the only networking options were no networking or automatic network configuration. There was no manual network configuration! What?!!!! This is a server install!

Also missing from the installer was any way of setting up network authentication services or modifying what was installed. The installer had been lobotomised.

Once the OS had installed and booted up there were some more nasty surprises. Again, you couldn’t set a static IP address and any changes to the networking were silently reverted. It was only with some Googling that I managed to hunt down the culprit, the network/physical:nwam service, which is the default configuration. WHY?!!! This is a SERVER not a laptop!

Once this was fixed I at least managed to get a static IP address set up, but it’s far more convoluted than with Solaris 10 or earlier.

Other strangeness in the design… All the X installation is there, except for the X server. Eh? What’s the point of that?

By default the GUI package manager isn’t installed. Once you do install it, however, it’s set up by default to show only the packages that are already installed, which is confusing. If you know where to look you can change this, but it’s a stupid default.

Getting NFS client stuff working was a challenge as well. When you manually run mount, everything seems to work out of the box: NFS filesystems mount fine and everything looks dandy. So, you put some mounts into /etc/vfstab and ‘mount -a’ works as expected. Reboot, however, and nothing happens! This is because most of the NFS client services are not turned on by default, yet they magically work if you run mount. Turning on the nfs/client:default service doesn’t enable the other services it requires either, but you don’t see this until a reboot. Stupid! It should work the same way at all times: if it works magically on the command line it should work at boot as well, and vice versa. Unpredictability on a server is a killer.

On the bright side, at least the kernel is enterprise grade.

Time for a sound check…

It’s now 12:12:12 on 12/12/12, i.e.

1 2 1 2 1 2 1 2 1 2 1 2

Mary had a little lamb, its fleece was as white as snow.

*tap* *tap* *tap*

Elite: Dangerous, possibly the ultimate Elite-type game?

Eight years ago, in a musing on my old blog site at LiveJournal, I outlined my idea for the best multi-player virtual Universe.

In November David Braben announced a new Kickstarter project to fund the long-awaited fourth game in the Elite series, “Elite: Dangerous”, and this time it’s networked!

From the description so far it looks as though the procedural Universe creation is very similar to the one I described in my original LiveJournal posting all those years ago, at least down to the star system level. For the first iteration, anyway, there won’t be any chance to leave your ship and wander around the planets etc., but that’s fine.

The only problem I see is the rather large amount of money the Kickstarter project is asking for: £1.25 million! The fundraising period is more than halfway through, yet the pledges are only just above the halfway mark and the rate of increase is slow. Somehow I don’t see it reaching the funding target by 5th January.

I’ve put my money where my mouth is and pledged as much as I can reasonably afford. If you too would like to see Elite IV come into being then go over to Kickstarter and help with the fund raising.

Update: New video of gameplay:

Planets a-hoy! The benefits of getting up early.

It was an early start, 4:30am to be precise, but that’s the only time when you can catch anything really photogenic in the sky from my back garden at the moment, as I’ve yet to get beyond the light pollution for deep sky objects.

So, yes, the early start, at “stupid o’clock.”

It was a beautiful morning. The sky had lost all of the high, contrail-derived cloud from the night before, which had obscured practically everything, and the air was still. It was chilly enough to need a hat and fleece but otherwise comfortable. The stars shone but were nothing beside Venus, the Moon and Jupiter.

Seeing as Jupiter had been out of view for so long, I immediately slewed the telescope around to point at it, looked in the eyepiece, focused and discovered that the shadow of one of the moons was passing across the face and was close to the edge. I needed to be quick to catch it in an image, so I rushed the “Imaging Source” camera out of its box, fitted the Baader filter and the Powermate 2.5x magnifier and started up the software.

After some critical focusing I pressed the “Capture” button and streams of data passed onto the hard disk. I’d made it. Little did I know that the first real capture was the best of the night, which, after later processing, produced this image:

5:09am: Jupiter with Europa (lower left) and Io (upper right). Europa’s shadow is just leaving the edge of the face of Jupiter.

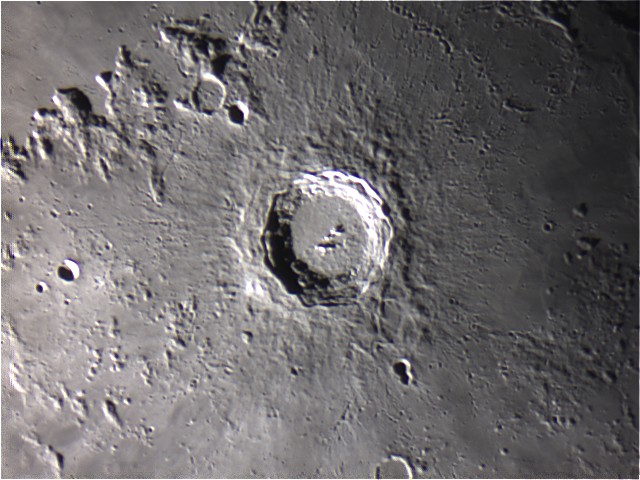

After almost an hour of imaging Jupiter, with the glow of dawn swiftly growing, I turned my attention to the Moon. There, in stark contrast on the edge of the illuminated half, sat the crater Copernicus, such an intricate crater with its ejecta field strewn around it. So, this became my second target of the morning:

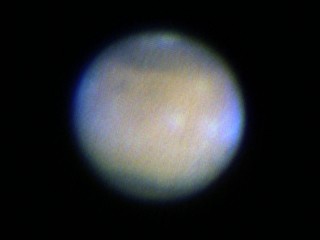

With light levels increasing and sunrise soon to be upon me, there was only one last target: Venus.

Because I’m hardly ever up this early and because I have no view of the western sky from the observatory I’ve never actually imaged Venus before. This time I didn’t bother removing the camera from the focuser but hoped I’d be able to find the planet using the finder scope only. It took a while to fully centre in on it but eventually I did. After a few minutes of tweaking the exposure, I took my final image of the day:

And so, that was that. I stowed away the telescope, shut off everything, closed the roof and came indoors, and off back to bed for a couple of hours.

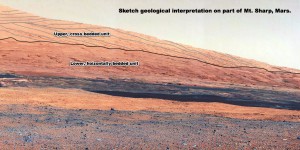

Geological Interpretation of a Curiosity Rover Image.

Having seen some of the glorious images sent back by NASA’s Curiosity (Mars Science Laboratory) rover, and the rather (geologically) uninformative BBC News and NASA/JPL reports upon them, I thought I’d create a geological sketch interpretation. So, here it is:

Horizontally bedded rocks on Earth are generally laid down in water, so the lower unit was most likely to have been deposited in a wet environment.

Large-scale cross-bedded rocks are usually subaerial wind-blown deposits, as in sand dunes. The bedding is the shadow of the trailing slopes of the dunes as they march across the landscape; what you see left behind is the root of the dune.

The scale in the image is hard to gauge, but seeing as the scarp is a couple of miles from the rover, the dunes which generated the cross-bedded units must have been many hundreds of metres high.

It should also be noted that the top of the lower, horizontally bedded unit seems to be an uneven erosional surface, suggesting a large time gap between the lower unit’s deposition and that of the upper unit.

Astronomical events: February to May

My last update on my astronomical exploits was way back at the beginning of February. At that point I’d just got the new telescope installed and Mars was getting closer to opposition.

Between then and the end of May was quite a busy time in the sky when it came to planetary observation as Mars continued to be visible from my back garden for much of the time and Saturn came out to play as well. Unfortunately, after the third week in May both planets were obscured by the house by the time dusk fell. Even so, I managed to get some decent images.

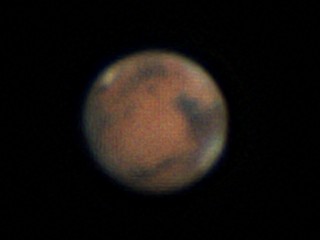

Mars

I’d already managed to get one good image of the planet by the beginning of February, but due to cloud cover the next opportunity wasn’t until early March. Thankfully, there was a short period of very good seeing, allowing me even to image clouds developing within the atmosphere.

Details on the surface weren’t that clear, but that was partly due to the low quality of the camera and its low speed at the light levels available. Once the atmosphere became more turbulent the results were nowhere near as good.

So, I decided to buy a better quality planetary camera. The resolution was still 640×480 but its noise levels were far lower, so even without getting the colour balance correct first time I managed to get a far better image:

Unfortunately, by the end of March the weather closed in and the next time I could get out to view the sky was in May.

By the middle of May the planet was rapidly receding from view, markedly shrinking and becoming harder to image, especially as by the time it was visible it was almost behind the house. However, because of the change of viewing angle it was far easier to see that it was indeed a sphere, as its phase was clearly visible:

Before long, however, it became impossible to image.

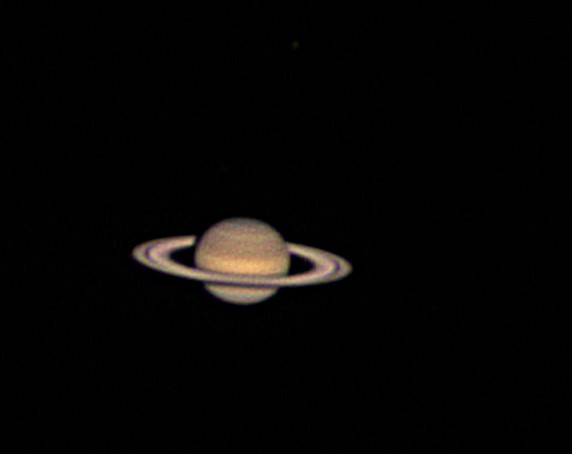

Saturn

Saturn wasn’t easily visible from my garden until the clouds cleared in May, which gave me only a few short weeks in which to view and image it before it too disappeared behind the house and into the dusk. Also, most evenings the sky was just too unstable to get decent images of the planet, given the amount of magnification required. Having said all that, I did manage a few pretty decent images, such as this:

Weather based computing definitions.

Cloud Computing

A computing resource located “out there” somewhere, connected to the Internet and operated by a third party.

When the heat is on, just like real clouds, they can either evaporate or become a storm (see Monsoon Computing). In either case it’s not good news.

Fog Computing

Like Cloud Computing but down to earth, i.e. based in reality and generally under the organisation’s direct control. Often called a Corporate Cloud Computing resource.

This generally hangs around longer than is required but never lets the temperature get too high.

Mist Computing

You’re sure that you purchased the equipment for your corporate cloud computing resource, but you can’t see very much of it and it’s not a lot of use.

Very Light Drizzle Computing

You’re pretty sure that there must be a computing resource somewhere, you can feel it, but you can’t find it.

Drizzle Computing

You seem to have a large number of light-weight and low powered computing systems for your processing. However, all they seem to do is annoy you and never actually do anything useful.

Rain Computing

You have a large number of independent computers all working to solve your problem, or at least dissolve it.

Stair-Rods or Monsoon Computing

Somehow you seem to have huge numbers of high power processors on your hands, all working on your problem uncontrollably. Unfortunately, the upshot of this is that your problem isn’t solved, it’s washed away by the massive deluge of cost and possibly information overload.

So, do you have any more/better amusing definitions for weather analogous computing names? If so post them as comments below.

NotSoBASIC

As discussed in a previous posting, I’ve been musing over the development of a modernised version of the classic procedural BASIC language, especially with the Raspberry Pi in mind.

To that end I’ve been setting out some goals for a project and working a little on some of the syntactical details to bring structures, advanced for-loop constructs and other modern features to a BASIC language that is as backwardly compatible with the old Sinclair QL SuperBASIC as possible.

So, here are the goals:

- The language is aimed at both the 13-year-old bedroom coder, getting him/her enthused about programming, and the basic needs of the general scientist. (Surprisingly, the needs of these two disparate groups are very similar.)

- It must be platform agnostic and portable. It must also have a non-restrictive, unencumbered license, unlike the GPL, so probably Apache, so as to allow it to be implemented on all platforms, including Apple’s iOS.

- It must have at least two, probably three, levels of language: beginner, standard and advanced. The beginner level would, like its predecessors in the 8-bit era, force the use of line numbers, for example.

- It must have fully integrated sound and screen control available simply, just as in the old 8-bit micro days. This, with the proper manual, would allow a 13-year-old to annoy the family within 15 minutes of starting to play.

- The graphical capability must include simple ways to generate publishable scientific graphical output, both to the screen and as Encapsulated PostScript, PDF and JPEG.

- The language must have modern compound variables, such as structures, possibly even pseudo-pointers so as to be able to store references to data or procedures and pass them around.

- The language should be as backwardly compatible with Sinclair QL SuperBASIC as possible. It’s a well tested language and it works.

- The language should be designed to be extendable but it is not envisaged that this would be in the first version.

- The language IS NOT designed to be a general purpose application development language, though later extensions may give this ability.

- The language will have proper scoping of variables with variables within procedures being local to the current call, unless otherwise specified. This allows for recursion.

- All devices and files are accessed via a URI in an open statement.

- Channels (file descriptors) must be a special variable type which can be stored in arrays and passed around.
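To make the last two goals concrete, here is a minimal Python sketch of opening a channel from a URI and treating the result as an ordinary value that can be kept in an array. `Channel` and `open_channel` are hypothetical names, and only the `file:` scheme is handled; which other URI schemes NotSoBASIC would support is not specified above.

```python
from urllib.parse import urlparse
from urllib.request import url2pathname


class Channel:
    """Hypothetical first-class channel value: it can be stored in lists
    (arrays) and passed to procedures like any other variable."""

    def __init__(self, uri, stream):
        self.uri = uri        # the URI the channel was opened from
        self.stream = stream  # underlying Python file object

    def close(self):
        self.stream.close()


def open_channel(uri, mode="r"):
    """Sketch of an OPEN statement driven by a URI.

    Only the file: scheme is handled here; any other device types would be
    dispatched on parts.scheme in the same place."""
    parts = urlparse(uri)
    if parts.scheme == "file":
        return Channel(uri, open(url2pathname(parts.path), mode))
    raise ValueError(f"unsupported URI scheme: {parts.scheme!r}")
```

Dispatching on the URI scheme keeps a single OPEN statement for files and devices alike, which is the point of the goal; adding a device then means adding a branch rather than a new keyword.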

As I said earlier, I’ve been thinking about how to do a great deal of this syntactically as well. This is where I’ve got so far:

[Edit: The latest version of the following information can be found on my website. The information below was correct at 10am 23rd February 2012.]

Variables.

Variable names MUST start with an alphabetic character and can only contain alphabetic, numeric and underscore characters. A suffix can be appended to give the variable a specific type, e.g. string. Without a suffix character the variable defaults to a floating point value.

Suffixes are:

$ string

@ pointer
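The naming and suffix rules above are simple enough to express as a single regular expression. This Python sketch assumes "alphabetic" means ASCII letters only; `variable_type` is a hypothetical helper, not part of any existing implementation.

```python
import re

# Rule from the text: a letter first, then letters, digits or underscores,
# optionally followed by one type suffix character.
# Assumption: "alphabetic" means ASCII letters only.
NAME_PATTERN = re.compile(r"([A-Za-z][A-Za-z0-9_]*)([$@]?)")

# Suffix -> type; no suffix means a floating point variable.
SUFFIX_TYPES = {"$": "string", "@": "pointer", "": "float"}


def variable_type(name):
    """Return the type implied by a variable name, or raise ValueError if illegal."""
    match = NAME_PATTERN.fullmatch(name)
    if match is None:
        raise ValueError(f"illegal variable name: {name!r}")
    return SUFFIX_TYPES[match.group(2)]


print(variable_type("name$"))  # string
print(variable_type("ptr@"))   # pointer
print(variable_type("age"))    # float
```

Using `fullmatch` matters: it rejects names such as `a$b`, where a suffix character appears somewhere other than the end.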

Compound variables.

Compound variables (structures) can be created using the “DEFine STRUCTure” command to create a template and then creating special variables with the “STRUCTure” command:

DEFine STRUCTure name

varnam

[…]

END STRUCTure

STRUCTure name varnam[,varnam]

An array of structures can also be created using the STRUCTure command, e.g.

STRUCTure name varnam(3)

The values can be accessed using a “dot” notation, e.g.

DEFine STRUCTure person

name$

age

DIMension vitals(3)

END STRUCTure

STRUCTure person myself, friends(3)

myself.name$ = "Stephen"

myself.age = 30

myself.vitals(1) = 36

myself.vitals(2) = 26

myself.vitals(3) = 36

friends(1).name$ = "Julie"

friends(1).age = 21

friends(1).vitals(1) = 36

friends(1).vitals(2) = 26

friends(1).vitals(3) = 36

As with standard arrays, arrays of structures can be multi-dimensional.

Structures can contain any number of standard variables, static array types and other structures. However, only structures defined BEFORE the one being defined can be used. Structure definitions are parsed before execution of the program begins. Structure variable creation takes place during execution.
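As a rough analogy only, the `person` example maps onto a Python dataclass: the DEFine STRUCTure template becomes a class defined before anything runs, each STRUCTure statement creates fresh instances at run time, and the DIMensioned member becomes a list. Python identifiers cannot end in `$`, so `name$` becomes `name`. I have also assumed that, as in SuperBASIC, `DIM vitals(3)` gives indices 0 to 3, hence four slots; `Person` and `make_array` are illustrative names.

```python
from dataclasses import dataclass, field


# Template: the equivalent of DEFine STRUCTure person ... END STRUCTure.
@dataclass
class Person:
    name: str = ""      # name$
    age: float = 0.0    # age (no suffix, so floating point)
    # DIMension vitals(3): assumed indices 0..3, so four slots per instance.
    vitals: list = field(default_factory=lambda: [0.0] * 4)


def make_array(template, size):
    """STRUCTure template varnam(size): one fresh instance per index 0..size."""
    return [template() for _ in range(size + 1)]


# STRUCTure person myself, friends(3)
myself = Person()
friends = make_array(Person, 3)

myself.name = "Stephen"
myself.age = 30
myself.vitals[1:4] = [36, 26, 36]

friends[1].name = "Julie"
friends[1].age = 21
friends[1].vitals[1:4] = [36, 26, 36]
```

`default_factory` is what gives every instance its own `vitals` list; a plain default list would be shared between all structures.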

Loops.

FOR/NEXT:

FOR assignment (TO expression [STEP expression] | UNTIL expression | WHILE expression) [NEXT assignment]

…

[CONTINUE]

…

NEXT [var]

The assignment flags the variable as the loop index variable. Loop index variables are normal variables.

The assignment, including the evaluation of its expression, happens only once, when entering the loop. The test expressions are evaluated at the beginning of every trip through the loop. If the test already fails at the time of loop entry (for example, the index is already past the TO limit), the commands within the loop do not get run.

The STEP operator can only be used if the loop index variable is either a floating point variable or an integer. The expression is evaluated to a floating point value and then added to the loop index variable. If the loop index variable is an integer, the value returned by the expression is stripped of its fractional part before being added to the variable.
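To pin down these FOR rules, here is a minimal Python sketch. `for_loop` is a hypothetical helper, and folding the TO, UNTIL and WHILE forms into a single `test` callable is my own simplification: `TO limit` becomes `lambda i: i <= limit` for a positive step, `WHILE e` becomes `e` and `UNTIL e` becomes `not e`. I have also assumed that the STEP value is evaluated once, on entry, as in classic BASICs.

```python
import math


def for_loop(start, test, step=1.0, integer_index=False):
    """Yield the loop-index values of a NotSoBASIC-style FOR loop (hypothetical helper).

    start         -- value of the assignment, evaluated once on loop entry
    test          -- callable checked at the top of every trip; the loop ends when falsy
    step          -- STEP value, evaluated to a float (assumed evaluated once, on entry)
    integer_index -- True if the loop index variable is an integer
    """
    increment = float(step)
    if integer_index:
        index = math.trunc(start)
        increment = math.trunc(increment)  # strip the fractional part: 2.7 -> 2, -2.7 -> -2
    else:
        index = float(start)
    while test(index):  # a test that fails on entry means the body never runs
        yield index
        index += increment


# FOR i = 1 TO 10 STEP 2.7 with an integer index: the step becomes 2
print(list(for_loop(1, lambda i: i <= 10, step=2.7, integer_index=True)))  # [1, 3, 5, 7, 9]

# FOR x = 5 TO 1: the test fails on entry, so the loop body never runs
print(list(for_loop(5, lambda x: x <= 1)))  # []
```

One consequence worth noting: with an integer index, any STEP between -1 and 1 truncates to zero, so a real implementation would probably want to reject it rather than loop forever.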

WHILE/END WHILE:

WHILE expression [NEXT assignment]

…

[CONTINUE]

…

END WHILE

This is equivalent to the WHILE variant of a FOR loop, minus the initial assignment, e.g.

x = 10

WHILE x > 3 NEXT x += y / 3

…

END WHILE

is equivalent to

FOR x = 10 WHILE x > 3 NEXT x += y / 3

…

NEXT
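The equivalence can be sketched in Python, which has no NEXT clause, so the NEXT assignment simply becomes the last statement of the loop body. `y` is never given a value in the example above; the value -3 used below (which makes x fall by 1 per trip) is purely an assumption, and `while_next_trips` is a hypothetical helper.

```python
def while_next_trips(x, y):
    """Return the values of x seen on each trip, plus x after the loop, for:
        WHILE x > 3 NEXT x += y / 3 ... END WHILE
    The FOR x = 10 WHILE ... form behaves identically once x has been set to 10,
    because its assignment is evaluated just once, on loop entry."""
    trips = []
    while x > 3:          # WHILE test: checked at the top of every trip
        trips.append(x)   # stands in for the body of the loop
        x += y / 3        # NEXT assignment: applied at the end of every trip
    return trips, x


print(while_next_trips(10, -3))  # ([10, 9.0, 8.0, 7.0, 6.0, 5.0, 4.0], 3.0)
```

One design point this exposes: translating CONTINUE as Python's `continue` would skip the NEXT assignment and could loop forever, so CONTINUE presumably has to jump to the NEXT clause rather than straight back to the test.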

DO/UNTIL:

DO

…

[CONTINUE]

…

UNTIL expression

The commands within the loop are run until the expression evaluates to a non-zero value.
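Python has no post-test loop, so a DO … UNTIL is usually emulated with `while True` and a test at the bottom. The sketch below assumes, from the position of UNTIL after the body, that the body always runs at least once; `do_until` is a hypothetical helper, and Python truthiness stands in for "non-zero".

```python
def do_until(body, condition):
    """Emulate DO ... UNTIL condition and return the number of trips made.

    The body always runs at least once; the loop stops as soon as
    condition() gives a non-zero (truthy) value."""
    trips = 0
    while True:
        body()
        trips += 1
        if condition():
            return trips


state = {"n": 0}


def step():
    state["n"] += 1


print(do_until(step, lambda: state["n"] >= 3))  # 3
print(do_until(step, lambda: 1))                # 1: the body still runs once
```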

Functions and procedures.

A function is merely a special form of a procedure which MUST return a numeric value. The suffix of a procedure determines its type, in the same way as variable names.

DEFine PROCedure name[(parameter[,parameter[…]])]

…

[RETURN expression]

END PROCedure

DEFine FUNction name[(parameter[,parameter[…]])]

…

RETURN expression

END FUNction

Parameters are local names which refer to the passed values, i.e. values are passed by reference. This means that any modification of a parameter within the procedure will change the value of any variable passed to it.

Variables created within the procedure will be local to the current incarnation, allowing recursion. Variables with global scope are available within procedures but will be superseded by any local variables with the same name.
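Python passes object references, but rebinding a parameter never affects the caller, so the by-reference rule has to be mimicked. This sketch uses a hypothetical `Ref` cell for that. It assumes that an expression argument such as n - 1 is passed as a fresh temporary, and it also shows per-call locals (which are what make recursion work) and a local hiding a global of the same name.

```python
class Ref:
    """Hypothetical mutable cell standing in for a by-reference parameter."""

    def __init__(self, value):
        self.value = value


def double_it(n):
    # DEFine PROCedure double_it(n) : n = n * 2 : END PROCedure
    # Writing through the reference changes the caller's variable.
    n.value = n.value * 2


def factorial(n):
    # 'result' is local to this incarnation, so each recursive call has its own.
    # The expression argument n - 1 is passed as a fresh temporary cell (assumption).
    if n.value <= 1:
        result = 1
    else:
        result = n.value * factorial(Ref(n.value - 1))
    return result


count = 100  # a variable with global scope


def read_global():
    return count  # no local 'count' here, so the global is visible


def shadow():
    count = 1     # a local of the same name supersedes the global
    return count
```

For example, after `a = Ref(21)` and `double_it(a)`, `a.value` is 42, while the global `count` stays at 100 however often `shadow()` is called.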

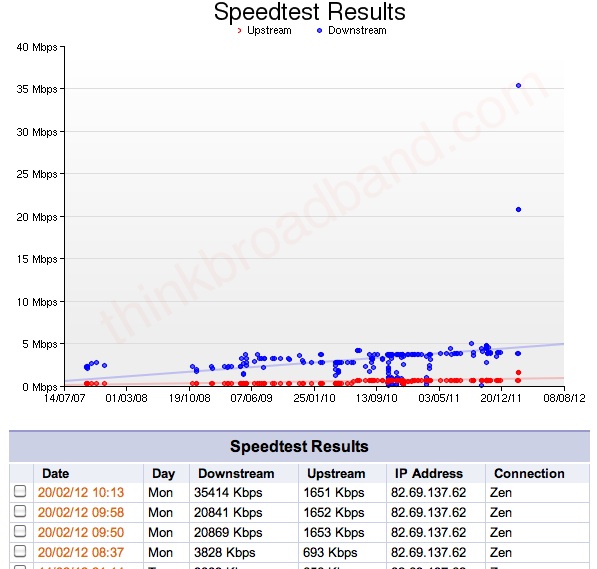

Joining the fast lane: Fibre to the Cabinet broadband Internet access is here.

Well, after quite a wait the Cowley BT telephone exchange has finally been enabled for Fibre to the Cabinet (FTTC) broadband. Even by BT’s own estimate, the exchange has come on-line nearly two months late.

So, what does having the new service involve?

Well, other than a hefty £80+VAT fee, it merely requires a BT Openreach engineer to visit your house and install a modem and additional face-plate filter onto the house’s master “line” socket and then go to the street cabinet containing your connection to rewire it. You will also need a firewall/router which can talk PPPoE. In other words, one which can use a network cable instead of a phone cable. These are the same as those used with Virgin Media cable-modems.

Although BT (via your ISP) will inform you that the process will take up to an hour, in fact it takes a lot less time than this. It’s about 5 minutes for the engineer to unpack the new modem and fix the faceplate, and then a further 10 minutes while he hunts for the correct street cabinet and rewires your phone line. Assuming that you have your router fully set up beforehand, that’s it. He just does a few tests and leaves.

In my case, I had a Billion BiPAC 7800N router which can do both ADSL (phone line) and connect via a network cable so all I needed to do was change a setting and reboot it.

So, this, after some tidying, is my new communications system:

Now that everything’s wall mounted and I’ve put all the wires into a conduit it looks a whole lot neater than before. Also, it’s unlikely to be knocked or cables snagged.

At the top of the picture you can see the Billion router. It’s not much to look at but it is a superb router. I do like the way that it can be mounted vertically on the wall, thus taking less space laterally.

Below the router is the BT modem. Thankfully this is the mark 3 model so is less likely to die horribly.

Finally, connected directly into the wall power socket is the Devolo 200Mb/s power line networking module. This connects to a similar unit in the spare bedroom, where my server sits, and to a multi-port power-line network switch in the living room to which is connected the TV, PS3 and amplifier.

So, what does all this shiny new equipment give me over and above what I had before? Other than the 10 times download speed increase and the four times upload speed jump, it also means that the connection should be far more stable. I’m also only paying about £3 more for this service than I was for the ADSL MAX service I was previously on and I get an extra 30GB of download quota bundled in with it.

Basically, I’m happy with it and that’s all that matters.

[Edited to add historical broadband speed test data]

Elite: Dangerous